2024-02-08: You Choose, LM Studio, WebAssembly and LLMs, Telepresence, Elastic profiling donation, Cilium 1.15

Thanks for reading the web version. You can subscribe to the Ops In Dev newsletter to receive it in your inbox.

👋 Hey, lovely to see you again

2024 brought new possibilities to run LLMs locally and learn how cloud-native technology (WebAssembly, Kubernetes, etc.) drives AI innovation. Besides learning new technology, this newsletter issue brings practical tools and tips for Dev, Sec and Ops. And if you prefer video content for learning, my new favorite is "You Choose" (more below in the Cloud Native section). Enjoy reading!

🤖 The Inner Dev learning AI/ML

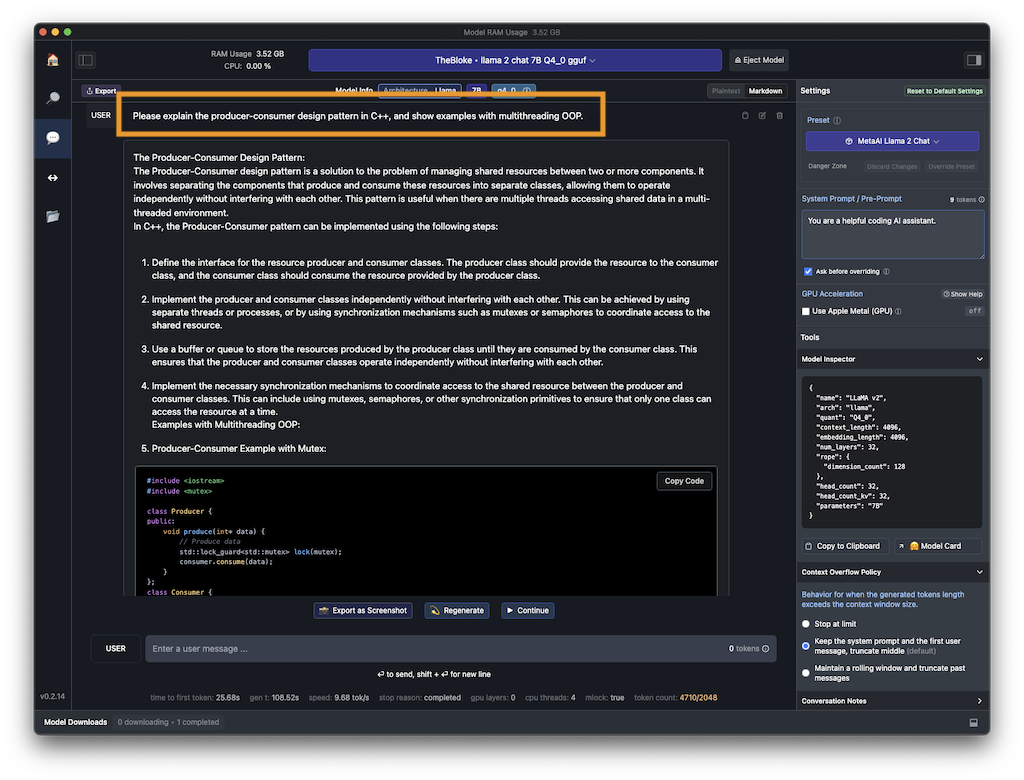

LM Studio allows you to discover, download and run local LLMs. Thanks, Sid Sijbrandij, for sharing in the January CEO AMA on GitLab Unfiltered. In addition to the integrated chat UI, LM Studio can run a local API server that an OpenAI SDK library, or any compatible client, can talk to programmatically. Ollama provides similar functionality.

The LM Studio screenshot shows a question I had in 2003, when I failed to understand the producer-consumer design pattern in C++ and how it relates to multithreading with shared resources: "Please explain the producer-consumer design pattern in C++, and show examples with multithreading OOP." The answer was generated with the Meta Llama 2 model.

The full output is available in the web version of this newsletter.

Full Llama 2 response

The Producer-Consumer Design Pattern:

The Producer-Consumer design pattern is a solution to the problem of managing shared resources between two or more components. It involves separating the components that produce and consume these resources into separate classes, allowing them to operate independently without interfering with each other. This pattern is useful when there are multiple threads accessing shared data in a multi-threaded environment.

In C++, the Producer-Consumer pattern can be implemented using the following steps:

1. Define the interface for the resource producer and consumer classes. The producer class should provide the resource to the consumer class, and the consumer class should consume the resource provided by the producer class.

2. Implement the producer and consumer classes independently without interfering with each other. This can be achieved by using separate threads or processes, or by using synchronization mechanisms such as mutexes or semaphores to coordinate access to the shared resource.

3. Use a buffer or queue to store the resources produced by the producer class until they are consumed by the consumer class. This ensures that the producer and consumer classes operate independently without interfering with each other.

4. Implement the necessary synchronization mechanisms to coordinate access to the shared resource between the producer and consumer classes. This can include using mutexes, semaphores, or other synchronization primitives to ensure that only one class can access the resource at a time.

Examples with Multithreading OOP:

1. Producer-Consumer Example with Mutex:

    #include <iostream>
    #include <mutex>

    class Producer {
    public:
        void produce(int* data) {
            // Produce data
            std::lock_guard<std::mutex> lock(mutex);
            consumer.consume(data);
        }
    };

    class Consumer {
    public:
        void consume(int data) {
            // Consume data
            std::lock_guard<std::mutex> lock(mutex);
        }

    private:
        std::mutex mutex;
        Producer producer;
    };

    int main() {
        Producer producer;
        Consumer consumer;

        // Start the producer thread
        std::thread producerThread(&Producer::produce, &producer, new int[10]);

        // Start the consumer thread
        std::thread consumerThread(&Consumer::consume, &consumer);

        // Wait for the producer to finish producing data
        producerThread.join();

        return 0;
    }

2. Producer-Consumer Example with Condition Variable:

    #include <iostream>
    #include <condition_variable>

    class Producer {
    public:
        void produce(int* data) {
            // Produce data
            std::cv_wait(&cv, &mutex);
            consumer.consume(data);
        }
    };

    class Consumer {
    public:
        void consume(int data) {
            // Consume data
            std::cv_wait(&cv, &mutex);
        }

    private:
        std::condition_variable cv;
        std::mutex mutex;
        Producer producer;
    };

    int main() {
        Producer producer;
        Consumer consumer;

        // Start the producer thread
        std::thread producerThread(&Producer::produce, &producer, new int[10]);

        // Start the consumer thread
        std::thread consumerThread(&Consumer::consume, &consumer);

        // Wait for the producer to finish producing data
        producerThread.join();

        return 0;
    }
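The generated listings are reproduced as the model returned them and would not compile as written: `std::cv_wait` is not part of the C++ standard library, `Producer` uses `consumer` and `mutex` members it never declares, and `<thread>` is not included. For comparison, here is a minimal sketch of the pattern the response describes (a buffer between producer and consumer, guarded by a mutex and condition variables), assuming C++17. The names `BoundedQueue` and `run_pipeline`, the capacity of 8, and the "sum the values" workload are hypothetical and chosen only for illustration; they are not part of the Llama 2 response.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical helper: a bounded, thread-safe queue shared by producers and consumers.
template <typename T>
class BoundedQueue {
public:
    explicit BoundedQueue(std::size_t capacity) : capacity_(capacity) {
        assert(capacity > 0);
    }

    // Blocks while the queue is full, then stores the item and wakes one consumer.
    void push(T item) {
        std::unique_lock<std::mutex> lock(mutex_);
        not_full_.wait(lock, [this] { return items_.size() < capacity_; });
        items_.push(std::move(item));
        not_empty_.notify_one();
    }

    // Blocks while the queue is empty and still open.
    // Returns std::nullopt once the queue is closed and fully drained.
    std::optional<T> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        not_empty_.wait(lock, [this] { return !items_.empty() || closed_; });
        if (items_.empty()) {
            return std::nullopt;
        }
        T item = std::move(items_.front());
        items_.pop();
        not_full_.notify_one();
        return item;
    }

    // Signals that no more items will be pushed and wakes all waiting consumers.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        not_empty_.notify_all();
    }

private:
    std::size_t capacity_;
    std::queue<T> items_;
    bool closed_ = false;
    std::mutex mutex_;
    std::condition_variable not_empty_;
    std::condition_variable not_full_;
};

// One producer pushes the values 1..count; `consumers` threads (at least one)
// pop values and add them up. Returns the combined total.
long long run_pipeline(int count, int consumers) {
    BoundedQueue<int> queue(8);
    std::mutex total_mutex;
    long long total = 0;

    std::vector<std::thread> workers;
    for (int i = 0; i < consumers; ++i) {
        workers.emplace_back([&] {
            long long local = 0;
            while (auto item = queue.pop()) {
                local += *item;
            }
            std::lock_guard<std::mutex> lock(total_mutex);
            total += local;
        });
    }

    std::thread producer([&] {
        for (int value = 1; value <= count; ++value) {
            queue.push(value);
        }
        queue.close();
    });

    producer.join();
    for (auto& worker : workers) {
        worker.join();
    }
    return total;
}
```

Calling `run_pipeline(100, 3)` from a `main` function should return 5050, the sum of 1 to 100, regardless of how the values are split among the three consumers. With GCC or Clang, compile with `-std=c++17 -pthread`.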

vLLM is a new open-source library for fast LLM inference and serving. It uses PagedAttention, a new attention algorithm that efficiently manages attention keys and values, which delivers up to 24x higher throughput than HuggingFace Transformers. Thanks to Priyanka Sharma for sharing.

Code generation with AI can differ from code completion. When different large language models are used for each task, the IDE integration needs to quickly analyze the typed source code and identify its scope. I met with Erran Carey for a coffee chat to learn how the GitLab Language Server for IDEs is built, and how it uses WebAssembly with Tree-sitter for language parsing. This helps detect the intent: either code completion or code generation with Anthropic. You can learn more about language models used by GitLab here, and follow the new GitLab Duo Coffee Chat series on YouTube.

Ollama announced Python and JavaScript libraries that make it easier to communicate with models and develop integrations, for example, with a Python library example for refining training data (source: gist, Twitter/X). Ollama Vision brings support for open-source multimodal models (source: Twitter/X).

Quick wins:

- Intro to Large Language Models (1 hour talk)

- Podcast: Open Source LLMs with Simon Willison

- Talk: What's wrong with LLMs and what we should be building instead by Tom Dietterich

- Mixing small language models and large ones for faster learning

- Personalizing AI – How to Build Apps Based on Your Own Data by Nico Meisenzahl

- NXAI, a European OpenAI competitor, was founded by Sepp Hochreiter together with Austrian industry partners and the University of Linz (source: heise.de (German)). Hochreiter developed the Long Short-Term Memory (LSTM) algorithm, which helped improve AI applications in the 1990s.

🐝 The Inner Dev learning eBPF

Dan wrote a DNS packet parser for an eBPF program (example source), which allows manipulating DNS requests at the kernel level. This could make inspecting DNS queries more efficient and open doors for chaos engineering experiments with eBPF.

eBPF can also be used for penetration testing tools. eBPFeXPLOIT supports hiding PIDs, MemoryShell (injecting code into memory), blocking the kill command, hiding injected eBPF programs, SSH and cron backdoors, etc. These are many things I had never heard of, and it sounds frightening if malicious actors use them instead.

Quick wins:

- Monitoring Dynamic Linker Hijacking With eBPF

- The eBPF Foundation published the "State of eBPF" report.

👁️ Observability

Elastic proposed to donate their continuous profiling agent to the OpenTelemetry CNCF project (issue). The technical committee is discussing the required steps, including licensing and reviewing the currently closed-source code. If the donation goes through, everyone can benefit from and contribute to continuous profiling. The Parca maintainers have already joined the discussion to potentially merge the efforts into a unified OpenTelemetry profiling agent.

Coroot 0.26 supports effortless .NET runtime monitoring, without additional configuration. The metrics are collected with the Coroot node agent, using a fork of the pyroscope-io/dotnetdiag library.

Quick wins:

- Monitoring TLS Endpoint Certificate Expiration with Prometheus

- What you need to know before creating your first OpenTelemetry pipeline for tracing

🛡️ DevSecOps

If you are using a Fritzbox router from AVM, beware of using the local fritz.box domain. After the gTLD .box became available, someone registered the domain and serves NFTs (source: heise.de (German)). It could also become a target of phishing attacks, for example by simulating a login portal on fritz.box.

Google announced an Android emulator and iOS simulator right in the browser, through their Project IDX.

Chromium will support third-party Rust libraries that are called from the C++ source code. Interop is limited to a single direction, from C++ to Rust, though. Google also announced a grant of $1 million to the Rust Foundation to help improve the interoperability between Rust and C++.

Zed is a new, lightweight IDE that is fully open source. It is currently only available on macOS and continues to add support for more languages, etc., in the latest 0.121.5 release.

Quick wins:

- Measuring Developer Productivity: Real-World Examples is a deep dive by Gergely Orosz and Abi Noda into developer productivity metrics used by Google, LinkedIn, Peloton, Amplitude, Intercom, Notion, Postman, and 10 other tech companies.

- The Unique Role of DevOps, SRE, and Platform Engineering in Different Organizations

- Mastering Kubernetes security: Safeguarding your container kingdom

🌤️ Cloud Native

Telepresence is a CNCF sandbox project that allows you to intercept service/namespace traffic in remote Kubernetes clusters and tunnel it to your local machine. This method can help with development and testing and does not require additional ingress configuration. I learned about Telepresence while helping review a blog post about how Telepresence works with cloud development environments in Gitpod.

WebAssembly System Interface (WASI) 0.2 is now based on the WebAssembly component model and provides a stable API for library developers. This is a huge step forward in improving the developer experience with WebAssembly, where higher-level libraries are needed for wider adoption.

The talk WebAssembly is becoming the runtime for LLMs shares an interesting shift from LLM apps in Python:

Today’s LLM apps, including inference apps and agents, are mostly written in Python. But this is about to change. Python is too slow, too bloated, and too complicated to install and manage. That’s why popular LLM frameworks, such as llama2.c, whisper.cpp, llama.rs, all thrive to have zero Python dependency. All those post-Python LLM applications and frameworks are written in compiled languages (C/C++/Rust) and can be compiled into Wasm.

At Rejekts NA 2023, I learned about the amazing series You Choose, where Viktor Farcic and Whitney Lee explain cloud-native concepts and let the audience decide interactively which CNCF projects to use in their walkthroughs. The Rejekts talk "Choose Your Own Adventure: The Perilous Passage to Production" is a great introduction to what to expect. If you want to jump right in, the latest episode is about Secrets Management.

Quick wins:

- How Kubernetes picks which pods to delete during scale-in

- What is Kube-Proxy and why move from iptables to eBPF?

- Docker vs. containerd vs. Podman - a learning thread on Twitter/X.

📚 Tools and tips for your daily use

- Building container images from scratch (with the scratch base image)

- How to Reverse-Proxy Applications on Subpaths with Traefik

- Debugging Distroless Containers with Docker Debug

- Quickemu lets you quickly create and run optimised Windows, macOS and Linux desktop virtual machines.

- Ksctl by KubeSimplify is a cloud-agnostic Kubernetes management tool that helps manage clusters running in multi-cloud environments.

- Zen is a simple, free and efficient ad-blocker and privacy guard for Windows, macOS and Linux.

- Chrome Developer Tools Tips: Hold shift while hovering over a request, and it highlights the initiator in green, and dependencies in red. Thanks Addy Osmani.

- Slack tip: When you prefix an emoji with `+`, you can react to the previous message. Thanks Thomas Paul Mann.

AI/ML focussed tools:

- LlamaIndex is a data framework for LLM-based applications to ingest, structure, and access private or domain-specific data.

- E2B is a sandbox for AI apps and agents. It provides a long-running cloud environment to run any LLM (GPTs, Claude, local LLMs, etc.) and lets it use tools as it would locally.

- Improve LLMs With Proxy-Tuning and avoid weight changes in the model. Sometimes an LLM can be too resource intensive to train, or the user does not have access to the LLM's weights.

🔖 Book'mark

- Book: Building a Cyber Risk Management Program, by Brian Allen, Brandon Bapst, Terry Allan Hicks, released December 2023.

- The complete WebAssembly course by KubeSimplify

- Awesome AI agents

- Awesome Platform Engineering Tools

🎯 Release speed-run

- Cilium 1.15 brings many enhancements, with Gateway API 1.0 support for Cilium as Kubernetes ingress, correlating traffic to network policies in Kubernetes, and much more.

- Kibana 8.12 supports Elastic's new query language, ES|QL. A short video shows how to edit a visualization within a dashboard.

- OpenTofu 1.6.1 provides bugfixes and performance improvements for the first stable 1.6.0 release after forking from HashiCorp Terraform.

- containerd 2.0.0-beta2 focusses on stability of features introduced in 1.x, and removes deprecated features, too.

- Quickwit support has been added to Falco log outputs.

- OpenLLMetry now supports Google Gemini (source: LinkedIn).

- Go 1.22 provides improvements to for loops: variable iteration safety, and support for ranges over integer values. Go 1.22 also includes the first “v2” package in the standard library, math/rand/v2.

🎥 Events and CFPs

- Mar 15: DevOpsDays LA in Pasadena, CA.

- Mar 18-20: SRECON Americas in San Francisco, CA.

- Mar 17-18: Cloud Native Rejekts in Paris, France.

- Mar 19-22: KubeCon EU 2024 in Paris, France.

- Apr 8-10: QCon London in London, UK.

- Apr 16-17: DevOpsDays Zurich in Winterthur, Switzerland.

- Apr 16-18: Open Source Summit NA 2024 in Seattle, Washington.

- May 7: KubeHuddle Toronto 2024 in Toronto, Canada.

- May 14-15: DevOpsDays Seattle in Seattle, WA.

- Jun 10-12: Monitorama 2024 in Portland, OR.

- Jun 17-21: Cloudland 2024 in Phantasialand near Cologne, Germany.

- Jun 19-21: DevOpsDays Amsterdam in Amsterdam, The Netherlands.

- Jun 20: Kubernetes Community Days Italy in Bologna, Italy.

- Jul 1-2: Kubernetes Community Days Munich 2024 in Munich, Germany.

- Sep 3-4: Container Days 2024 in Hamburg, Germany.

- Sep 16-18: Open Source Summit EU 2024 in Vienna, Austria.

- Sep 26-27: DevOpsDays London in London, UK.

- Nov 12-15: KubeCon NA 2024 in Salt Lake City, Utah.

👋 CFPs due soon

- May 7: KubeHuddle Toronto 2024 in Toronto, Canada. CFP closes Feb 9.

- Jul 1-2: Kubernetes Community Days Munich 2024 in Munich, Germany. CFP closes Mar 31.

- Sep 3-4: Container Days 2024 in Hamburg, Germany. CFP closes Mar 31.

Looking for more CFPs?

- Developers Conferences Agenda by Aurélie Vache.

- Kube Events.

- GitLab Speaking Resources handbook.

🎤 Shoutouts

Thanks for the laugh, Dragoi Ciprian.

Client did not pay? Add opacity to the body tag and decrease it every day until their site completely fades away.

🌐

Thanks for reading! If you are viewing the website archive, make sure to subscribe to stay in the loop! See you next month 🤗

Cheers, Michael

PS: If you want to share items for the next newsletter, just reply to this newsletter, send a merge request, or let me know through LinkedIn, Twitter/X, Mastodon, or Bluesky. Thanks!