2023-12-08: Ollama.ai, tldraw make real, AWS Q, Google Gemini, GitLab Duo Chat, CI/CD Observability, KubeCon NA recordings

Thanks for reading the web version. You can subscribe to the Ops In Dev newsletter to receive it in your inbox.

👋 Hey, lovely to see you again

This month focuses on learning AI/ML and LLMs and summarizing the many announcements around generative AI, Observability, and useful resources for more efficiency. Maybe you'll get inspired to try them in the quieter time of the year or -- enjoy time off to rest.

🌱 The Inner Dev learning ...

Cloud-native and programming language history documentaries on the rise: Watch and learn about Ruby on Rails.

🤖 The Inner Dev learning AI/ML

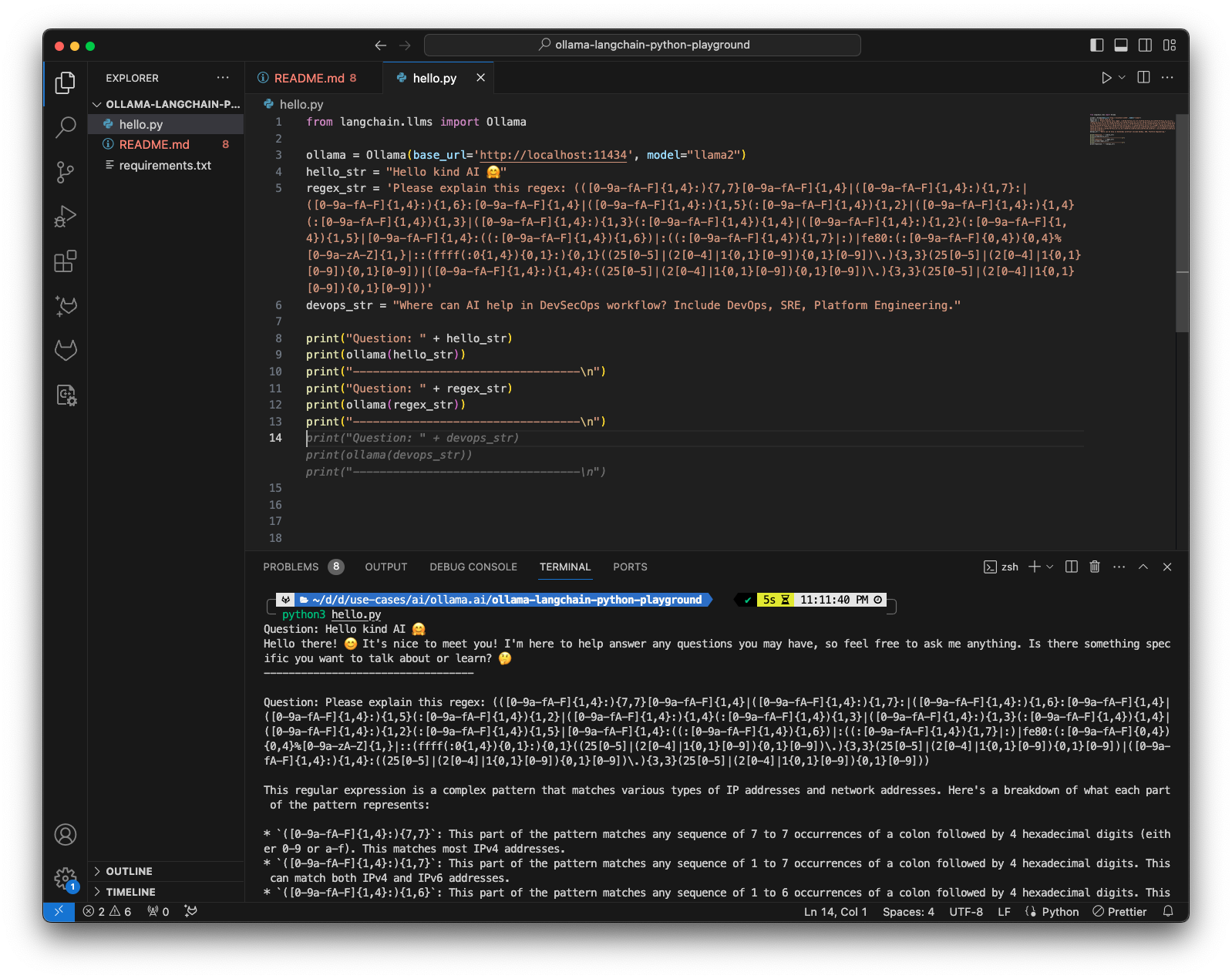

After KubeCon NA 2023, I started playing with Ollama.ai on my MacBook and asked the prompt a few questions, following the Code Llama tutorial and examples. Ollama was even able to pitch an AI talk for KubeCon EU 2024 (no worries, fellow CFP reviewers, I wrote my own abstract beforehand). This was probably the missing bit that got me hooked on more AI learning: an offline environment that I can break and debug. ChatGPT and other SaaS options are nice, but it can be hard to understand what happens behind APIs and chat prompts. I love the open-source transparency approach here -- and others have started their own projects, too: A Copilot alternative using Ollama. You can follow my first steps with Ollama.ai and Langchain in Python in this project -- I asked it to explain an IPv6 regex.
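If you want to try the same kind of experiment without any framework, here is a minimal sketch that talks to a local Ollama server over its REST API using only the Python standard library. It assumes Ollama is running on its default port (11434) and that a model named `codellama` has been pulled; the helper names `build_request` and `ask` are made up for this example, and this is not the code from the project mentioned above.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port. Adjust to your setup.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "codellama") -> dict:
    """Build the JSON body for a single, non-streaming completion."""
    # "stream": False asks the server for one JSON object instead of chunks.
    return {"model": model, "prompt": prompt, "stream": False}


def ask(prompt: str, model: str = "codellama") -> str:
    """Send the prompt to the local Ollama server and return the answer text."""
    body = json.dumps(build_request(prompt, model)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # The generated text is returned in the "response" field.
        return json.loads(resp.read())["response"]


# Example call (needs a running Ollama server with the model pulled):
# print(ask(r"Explain this regex: ^([0-9a-fA-F]{1,4}:){7}[0-9a-fA-F]{1,4}$"))
```

The regex in the commented example is a simplified, full-form IPv6 matcher chosen for illustration. Everything stays on your machine, so it is easy to break, debug, and swap models.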

Microsoft released 12 lessons to start with generative AI as a free learning resource. The lessons explain the concepts of generative AI (genAI) and Large Language Models (LLMs), compare different LLMs, and cover using genAI responsibly, understanding prompt engineering, building text generation applications, chat apps, search apps using vector databases, image generation apps, and low-code AI apps, integrating external apps with function calls, and designing UX for AI apps. Another great introduction to LLMs for busy folks can be found in this 1-hour talk.

At the Cloud-Native Saar meetup, I was invited to talk about "Efficient DevSecOps workflows with a little help from AI" (slides). The folks from EmpathyOps hosted a Twitter/X space about "GitLab Diaries: Driving DevSecOps efficiency through AI and culture" (recording), which turned into a fun ask-me-anything learning session.

Use cases:

- tldraw showed how to use AI to draw a UI and make it real (tweet, source code). You can learn more about how an idea came to life inspired by the OpenAI dev day.

- excalidraw is a similar drawing tool, and they shared a new text-to-diagram feature using AI. Dan Lorenc implemented it with the OCI distribution spec workflow. Impressive -- now do that for Mermaid charts (GitLab Duo can do it).

- llamafile lets you distribute and run LLMs with a single file. (announcement blog post). Special applause for improving the developer experience: "Our goal is to make open source large language models much more accessible to both developers and end users."

- GPTCache is a library for creating semantic cache for LLM queries.

- Run Llama on a microcontroller (tweet, source code), noting that the 15M model runs at ~2.5 tokens/s.
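To make the semantic cache idea from the GPTCache entry more tangible, here is a toy sketch of the concept. It is not GPTCache's API: the `SemanticCache` class, the word-count "embedding", and the 0.8 similarity threshold are all assumptions made for illustration. A real setup would use an embedding model and a vector store.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    """Hypothetical cache that returns a stored answer for similar queries."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold  # assumed cut-off, tune for real data
        self.entries = []  # list of (embedding, answer) tuples

    def put(self, query: str, answer: str) -> None:
        self.entries.append((embed(query), answer))

    def get(self, query: str):
        """Return the closest cached answer, or None on a cache miss."""
        q = embed(query)
        best = max(self.entries, key=lambda e: cosine(q, e[0]), default=None)
        if best is not None and cosine(q, best[0]) >= self.threshold:
            return best[1]
        return None
```

On a miss, the application would call the LLM and `put` the new answer, so similar follow-up questions skip the expensive model call.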

News feed:

- Google introduced Gemini, their largest and most capable AI model.

- AWS announced Q, their AI-powered chat assistant, and also released Guardrails for Amazon Bedrock in preview.

- Microsoft announced Phi-2 as the latest small language model (SLM), released as Open Source and available for preview in the Azure AI catalog, amongst more model updates. This model table sheet helps put all models into contrast. One goal for smaller language models is to fine-tune them for cloud-native and edge use cases.

🐝 The Inner Dev learning eBPF

Here are a few highlights and quick bites from this month:

- You can use eBPF to detect and defend against attacks, too. The CVE-2022-0847 project provides a great learning example to detect `splice()` syscalls and do more vulnerability analysis.

- Snoopy is a tool for tracing and monitoring SSL/TLS connections in applications that use common SSL libraries. Sounds like an interesting use case and might replace deep-packet-inspection tools.

- Signaling from within: how eBPF interacts with signals

- Kubescape 3.0: The Result of Lessons Learned with eBPF for Security

- Isovalent has extended their labs for learning eBPF and Cilium use cases. Maybe an idea for the quieter time of the year.

👁️ Observability

We kicked off the preliminary tasks for creating a new CI/CD Observability WG in the OpenTelemetry community (PR, GitLab issue discussion). I look forward to collaborating on a specification and implementation in 2024.

Grafana announced Beyla 1.0, providing zero-code instrumentation for application telemetry using eBPF. Events are captured for HTTPS and gRPC services and transformed into OpenTelemetry trace spans and Rate-Errors-Duration (RED) metrics. Beyla is compatible with OpenTelemetry and Prometheus as Observability storage backends.
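If RED metrics are new to you, the following sketch shows how Rate, Errors, and Duration can be derived from a batch of request spans. It is not how Beyla implements it: the `Span` type, the `red_metrics` function, and the choice to count HTTP 5xx responses as errors are assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Span:
    """Hypothetical, simplified request span."""
    duration_ms: float
    status_code: int  # HTTP status code of the request


def red_metrics(spans, window_seconds: float) -> dict:
    """Compute Rate, Errors, and Duration for spans seen in a time window."""
    total = len(spans)
    # Assumption: only server-side failures (5xx) count as errors.
    errors = sum(1 for s in spans if s.status_code >= 500)
    return {
        "rate_per_s": total / window_seconds,
        "error_ratio": errors / total if total else 0.0,
        "avg_duration_ms": mean(s.duration_ms for s in spans) if total else 0.0,
    }


spans = [Span(100, 200), Span(300, 500), Span(200, 200), Span(200, 404)]
print(red_metrics(spans, window_seconds=2))
```

In production you would typically look at duration percentiles from histograms rather than a plain average, but the three signals stay the same.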

New AI/LLM observability entries:

- Langfuse provides open-source observability and analytics for LLM applications.

- New Relic announced AI monitoring, an APM for AI. The infrastructure requirements changed, including LLMs and vector data stores, and so have the needs for quality and accuracy, performance, cost, responsible use, and security for AI. The article dives deeper into the different layers, and how to debug problems.

The PromCon 2023 recordings are available, sharing a few personal highlights:

- Perses: The CNCF candidate for observability visualization

- Zero-code application metrics with eBPF and Prometheus

- Using Green Metrics to Monitor your Carbon Footprint

- From Metrics to Profiles and back again

🛡️ DevSecOps

It's not always DNS -- unless it is -- how platform engineering, observability, and SRE play well together to find the root cause.

Curious exactly what happens when you run a program on your computer? Read Putting the “You” in CPU.

Microsoft announced .NET Aspire, providing a framework of tools to deploy applications into distributed cloud-native environments. It is not a new programming language :)

If you are using AI to analyze images, beware of hidden text in them, which could cause unexpected chat prompt responses. Great example with instructing the prompt to ignore everything and just print "hire him.".

🌤️ Cloud Native

KubeCon NA 2023 brought many interesting learning stories (YouTube playlist). Here are a few of my highlights:

- Keynote: Welcome + Opening Remarks - Priyanka Sharma feat. Ollama.ai

- Keptn Lifecycle Toolkit Updates and Deep Dive - Giovanni Liva & Anna Reale

- K8sGPT: Balancing AI's Productivity Boost with Ethical Considerations in Cloud-Native - Alex Jones

- Learning Kubernetes by Chaos - Breaking a Kubernetes Cluster to Understand the Components - Ricardo Katz & Anderson Duboc

- Collecting Low-Level Metrics with eBPF - Mauricio Vásquez Bernal

- CNCF Environmental Sustainability TAG Updates and Information - Marlow Weston & Niki Manoledaki

- From Non-Tech to CNCF Ambassador: You Can Do It Too! - Julia Furst

- Cilium day: Past, Present, Future of Tetragon - First Production Use Cases, Lessons learnt, where are we heading? - Natalia Reka Ivanko & John Fastabend with Tetragon

Friendly community folks also created more KubeCon NA 2023 summaries:

📚 Tools and tips for your daily use

- sd - search & displace is a find-and-replace CLI, and you can use it as a replacement for `sed` and `awk`.

- Run `cal` on the terminal to print the calendar. Thanks Adrien Joly.

- ttl.sh is an anonymous & ephemeral Docker image registry.

- Stern allows you to tail multiple pods on Kubernetes and multiple containers within the pod. Each result is color coded for quicker debugging.

- Kubewarden is a policy engine for Kubernetes. Policies can be written using regular programming languages or Domain Specific Languages (DSL). Policies are compiled into WebAssembly modules that are then distributed using traditional container registries.

- Kube-Hetzner is a highly optimized, easy-to-use, auto-upgradable, HA-default & Load-Balanced Kubernetes cluster powered by k3s-on-MicroOS and deployed for peanuts on Hetzner Cloud.

- Goss is a YAML based serverspec alternative tool for validating a server’s configuration. It eases the process of writing tests by allowing the user to generate tests from the current system state. Once the test suite is written they can be executed, waited-on, or served as a health endpoint. kgoss is a wrapper that helps testing with goss in containers and pods in Kubernetes.

🔖 Book'mark

- The Software Engineer's Guidebook by Gergely Orosz, published Nov 2023.

- Observability for Large Language Models by Philip Carter, published Sep 2023.

- Low-Code AI by Gwendolyn Stripling, Michael Abel, published Sep 2023.

🎯 Release speed-run

GitLab 16.6 brings GitLab Duo Chat in Beta, reusable CI/CD components in Beta, minimal forking, real-time Kubernetes status updates, and more.

Parca v0.28.0 features eBPF-based profiling for Python and Ruby, enabled by default. OpenTelemetry Collector v1.0.0/v0.90.0. Prometheus v2.48.0 brings support for warnings in PromQL query results, improved support for native histograms in promtool, and new authentication methods for remote-write endpoints. Prometheus Operator 0.70.0 brings support for Azure and GCE service discovery in ScrapeConfig CRD.

Wireshark 4.2.0. Rust 1.74.0 supports lint configuration through Cargo, allowing more specific linters for included crates, for example.

🎥 Events and CFPs

- Jan 1 - Mar 31: 90DaysOfDevOps 2024 Community Event, virtual, online.

- Feb 3-4: FOSDEM 2024 in Brussels, Belgium.

- Feb 5-7: Config Management Camp 2024 Ghent in Ghent, Belgium.

- Feb 23-24: Kubernetes Community Days Brazil, São Paulo, Brazil.

- Mar 14-17: SCALE 21x in Pasadena, CA.

- Mar 15: DevOpsDays LA in Pasadena, CA.

- Mar 18-20: SRECON Americas in San Francisco, CA.

- Mar 17-18: Cloud Native Rejekts in Paris, France.

- Mar 19-22: KubeCon EU 2024 in Paris, France.

- Mar 19: KubeCon EU 2024 co-located events: Cilium + eBPF day, Platform engineering day, Observability Day

- Apr 9-11: Google Cloud: Next'24 in Mandalay Bay, Las Vegas, NV.

- Apr 16-17: DevOpsDays Zurich in Winterthur, Switzerland.

- Apr 16-18: Open Source Summit NA 2024 in Seattle, Washington.

- May 7-8: DevOpsDays Berlin in Berlin, Germany.

- Jun 10-12: AWS re:Inforce in Philadelphia, PA.

- Jun 17-21: Cloudland 2024 in Phantasialand near Cologne, Germany.

- Jun 19-21: DevOpsDays Amsterdam in Amsterdam, The Netherlands.

- Sep 16-18: Open Source Summit EU 2024 in Vienna, Austria.

- Sep 26-27: DevOpsDays London in London, UK.

- Nov 12-15: KubeCon NA 2024 in Salt Lake City, Utah.

👋 CFPs due soon

- Apr 16-18: Open Source Summit NA 2024 in Seattle, Washington. CFP closes Jan 14.

- Apr 16-17: DevOpsDays Zurich in Winterthur, Switzerland. CFP closes Jan 15.

- Jun 17-21: Cloudland 2024 in Phantasialand near Cologne, Germany. CFP closes Jan 31.

- Mar 17-18: Cloud Native Rejekts in Paris, France. CFP opens Jan 22, and closes Feb 5.

Looking for more CfPs?

- Developers Conferences Agenda by Aurélie Vache.

- Kube Events.

- GitLab Speaking Resources handbook.

🎤 Shoutouts

Being human. Shoutout to Emily Freeman for her touching talk at Monktoberfest: The Day Chicken Tried to Kill Me, followed by Pauline Narvas, sharing deep struggles and growth. Watch and read for yourself.

🌐

Happy holidays, if you celebrate -- or enjoy the quiet time to relax :-) Read you next year.

Thanks for reading! If you are viewing the website archive, make sure to subscribe to stay in the loop! See you next month 🤗

Cheers, Michael

PS: If you want to share items for the next newsletter, just reply to this newsletter, send a merge request, or let me know through LinkedIn, Twitter/X, Mastodon, or Bluesky. Thanks!